The MAX computer algebra seminar

The MAX seminar takes place in the Alan Turing building at the campus of École polytechnique. Click here for more information on how to join us.

Upcoming talks

2025, December 1

TBA

Time: from 11h00 to 12h00

Room: Henri Poincaré

TBD

Past talks

2025, October 6

Fast multivariate integration in D-modules

Time: from 11h00 to 12h00

Room: Grace Hopper

Not all integrals can be expressed in closed form using elementary functions, as shown by Liouville's theorem. In contrast, the integral of a holonomic/D-finite function is always holonomic/D-finite; that is, the integral of a function satisfying sufficiently many linear differential equations (LDEs) with polynomial coefficients also satisfies such a system of LDEs. This makes the holonomic and D-finite frameworks particularly relevant for symbolic integration.

I will address two central algorithmic problems in this field: the problem of integration with parameters, where one seeks a differential equation satisfied by a parametric integral, and the reduction problem, where the goal is to find linear relations between integrals. Two distinct approaches exist, the D-finite one and the holonomic one. The D-finite approach has been the most studied and offers efficient algorithms, but it lacks the full expressivity of the holonomic setting, which can handle a broader class of integrals, in particular those over semi-algebraic sets. However, the current algorithms developed for the holonomic setting have a prohibitive computational cost. I will present a new reduction algorithm working in a mixed approach, aiming to balance the efficiency of D-finiteness with the expressivity of holonomy. This reduction is inspired by the Griffiths-Dwork method for rational functions and similarly yields an algorithm for the problem of parametric integration.

As an application, I will present the computation of a differential equation for the generating function of 8-regular graphs, which was previously out of reach.

This is joint work with my PhD advisors

2025, September 29

Matryoshka Arithmetic

Time: from 11h00 to 12h00

Room: Grace Hopper

How to automatically determine reliable error bounds for a numerical computation? One traditional approach is to systematically replace floating point approximations by intervals or balls that are guaranteed to contain the exact numbers of interest. However, operations on intervals or balls are more expensive than operations on floating point numbers, so this approach involves a non-trivial overhead. In this talk, we present several approaches to remove this overhead, under the assumption that the function f that we wish to evaluate is given as a straight-line program (SLP). We will first study the case when the arguments of our function lie in fixed balls. For polynomial SLPs, we next consider the “global” case where this restriction on the arguments is removed.

Based on joint work with
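For context, the baseline whose overhead the talk seeks to remove can be sketched as follows: one generic evaluator for a straight-line program (SLP), run once on floats and once on balls (center, radius). This is a minimal sketch under our own assumptions: the instruction format `(op, i, j)`, the `Ball` class and `eval_slp` are hypothetical names, and radii are padded with `math.nextafter` and `math.ulp` instead of being computed with directed rounding, as a production ball library such as Arb (now part of FLINT) would do. It does not implement the speakers' techniques.

```python
import math


def _up(x):
    """Nudge a nonnegative float one step towards +inf to over-approximate a bound."""
    return math.nextafter(x, math.inf)


class Ball:
    """A real ball [c - r, c + r] with float center and radius."""

    def __init__(self, c, r=0.0):
        self.c, self.r = float(c), float(r)

    def __add__(self, other):
        c = self.c + other.c
        # Propagated radius, plus one ulp of the center to absorb its rounding error.
        return Ball(c, _up(_up(self.r + other.r) + math.ulp(c)))

    def __mul__(self, other):
        c = self.c * other.c
        # |xy - c1*c2| <= |c1|*r2 + |c2|*r1 + r1*r2, plus rounding of the center.
        r = _up(abs(self.c) * other.r)
        r = _up(r + _up(abs(other.c) * self.r))
        r = _up(r + _up(self.r * other.r))
        return Ball(c, _up(r + math.ulp(c)))


def eval_slp(program, inputs):
    """Evaluate an SLP: each instruction (op, i, j) appends regs[i] op regs[j]."""
    regs = list(inputs)
    for op, i, j in program:
        regs.append(regs[i] + regs[j] if op == "add" else regs[i] * regs[j])
    return regs[-1]


# f(x) = x*x + x as a two-instruction straight-line program.
F = [("mul", 0, 0), ("add", 1, 0)]
```

The same `eval_slp` runs on plain floats (`eval_slp(F, [2.0])` returns `6.0`) and on balls (`eval_slp(F, [Ball(0.1)])`). The ball version does several floating-point operations per arithmetic operation, which is the kind of overhead the talk addresses.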

2025, June 23

Projective variety recovery from unknown linear projections

Time: from 10h45 to 11h45

Room: Grace Hopper

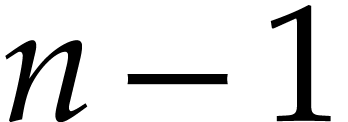

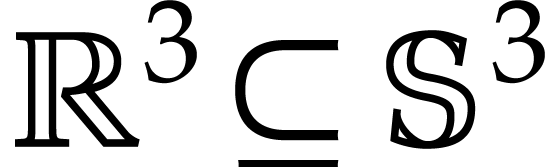

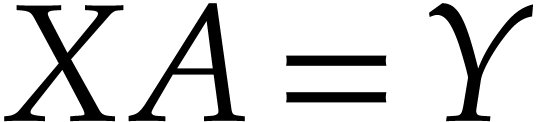

We study how a smooth irreducible algebraic variety  of dimension  embedded in  (with  ), whose degree is  , can be recovered using two projections from unknown points onto unknown hyperplanes. The centers and the hyperplanes of projection are unknown: the only input consists of the defining equations of each projected variety. We show how both the projection operators and the variety in  can be recovered modulo some action of the group of projective transformations of  . This configuration generalizes results obtained in the context of curves embedded in  ([1]) and results concerning surfaces embedded in  ([2]).

We show how in a generic situation, a characteristic matrix of the

pair of projections can be recovered. In the process we address

dimensional issues and as a result establish a necessary condition, as

well as a sufficient condition to compute this characteristic matrix

up to a finite-fold ambiguity. These conditions are expressed as

minimal values of the degree of the dual variety. Then we use this

matrix to recover the class of the couple of projections and as a

consequence to recover the variety. For a generic situation, two

projections define a variety with two irreducible components. One

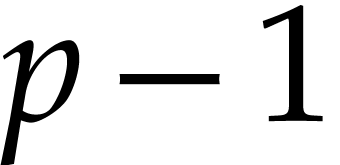

component has degree  and the other has degree

and the other has degree

, being the original variety.

, being the original variety.

[1]

[2]  from two generic linear projections. In Proceedings of the 36th International Symposium on Symbolic and Algebraic Computation (ISSAC), 2011.

2025, June 16

On the Existence of Principal Differential Ideals for Polynomial Derivations: Recent Advances and Computational Aspects

Time: from 11h00 to 12h00

Room: Henri Poincaré

We restrict ourselves to the (seemingly) simple setting of polynomial derivations, with a focus on the long-standing open problems pertaining to the existence of principal differential ideals and their concise representation. These particular ideals play an important theoretical role in the integrability of polynomial dynamical systems and have found several applications (e.g. in theoretical physics) since their introduction in the seminal work of Gaston Darboux, almost 150 years ago. We show that the coefficients of the (unique) generator have to satisfy underlying recurrences that can be made explicit. This key insight sheds some light on the structure of such objects: not only does it allow us to reason about their existence, it also suggests a completely novel approach for their effective computation. This is joint work with

2025, June 2

Resonances as a computational tool

Time: from 11h00 to 12h00

Room: Henri Poincaré

A large toolbox of numerical schemes for dispersive equations has been established, based on different discretization techniques such as discretizing the variation-of-constants formula (e.g., exponential integrators) or splitting the full equation into a series of simpler subproblems (e.g., splitting methods). In many situations these classical schemes allow a precise and efficient approximation. This, however, drastically changes whenever non-smooth phenomena enter the scene such as for problems at low regularity and high oscillations. Classical schemes fail to capture the oscillatory nature of the solution, and this may lead to severe instabilities and loss of convergence. In this talk I present a new class of resonance based schemes. The key idea in the construction of the new schemes is to tackle and deeply embed the underlying nonlinear structure of resonances into the numerical discretization. As in the continuous case, these terms are central to structure preservation and offer the new schemes strong geometric properties at low regularity.

2025, March 24

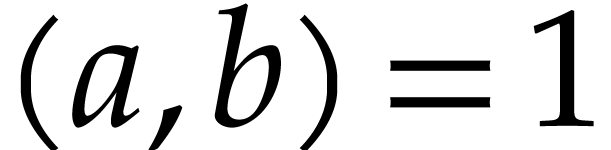

A computational proof of the Schmidt-Kolchin Conjecture

Time: from 11h00 to 12h00

Room: Grace Hopper

Recently solved by  . A free family of polynomials containing exactly this number of elements was unraveled by

We show how the polynomials found by

This is joint work with

2025, March 3

Elimination for Differential Dynamical Systems

Time: from 11h00 to 12h00

Room: Grace Hopper

Differential dynamical systems appear in many applications in modeling and control theory. Frequently, for such dynamical systems, the task is to compute the minimal differential equation satisfied by a chosen coordinate. This task arises in particular in applications when only part of the solution data can be experimentally observed. As such, it is an important special case of more general differential elimination problems.

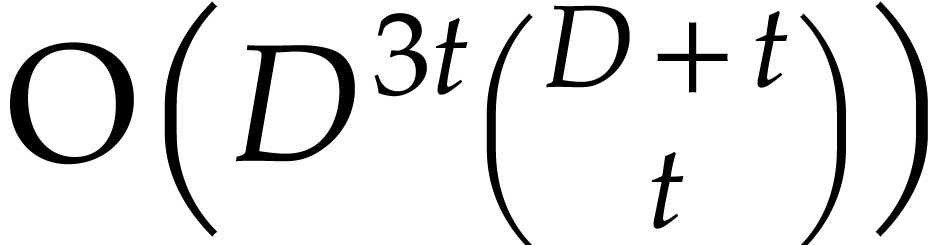

We present a new result, which provides explicit bounding hyperplanes for the Newton polytope of such a minimal equation. The obtained bounds are based on the degrees of the given dynamical system and are proven to be sharp in “more than half the cases”. We further use these bounds to design an algorithm for computing the minimal equation following the evaluation-interpolation paradigm. Using an implementation in Julia, we demonstrate that our algorithm can tackle problems that are out of reach for state-of-the-art software for differential elimination. Lastly, we discuss potential future improvements to this algorithm using sparse elimination theory. This is joint work with

2025, January 31

Fast basecases for arbitrary-size multiplication

Time: from 11h00 to 11h45

Room: Grace Hopper

(This talk is held jointly with the FLINT workshop, hence the unusual day of the week.)

Multiple precision libraries like GMP typically use assembly-optimized loops for basecase operations on variable-length operands. We consider the alternative of generating lookup tables with hardcoded routines for many fixed sizes, e.g. for all multiplications up to 16 by 8 words. On recent ARM64 and x86-64 CPUs, we demonstrate up to a 2x speedup over GMP for basecase-sized multiplication. We pay special attention to the computation of approximate products and demonstrate up to a 3x speedup over GMP/MPFR for floating-point multiplication.
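To make the two strategies concrete, here is a Python sketch of a generic schoolbook basecase loop over 64-bit limbs next to a lookup table of generated, fully unrolled routines for fixed sizes. All names (`mul_basecase`, `make_fixed`, `TABLE`, `mul`) are hypothetical, the table only covers sizes up to 4 by 4 words (the talk mentions up to 16 by 8), and Python obviously shows none of the speedups measured on ARM64 and x86-64; this only illustrates the structure, not GMP's or the speaker's code.

```python
MASK = (1 << 64) - 1  # one 64-bit machine word


def mul_basecase(a, b):
    """Generic schoolbook product of little-endian 64-bit limb lists, len(a) >= len(b)."""
    m, n = len(a), len(b)
    r = [0] * (m + n)
    for j in range(n):
        carry = 0
        for i in range(m):
            t = a[i] * b[j] + r[i + j] + carry
            r[i + j] = t & MASK
            carry = t >> 64
        r[m + j] = carry
    return r


def make_fixed(m, n):
    """Generate the source of a fully unrolled m-by-n product routine, then compile it."""
    lines = [f"def mul_{m}x{n}(a, b):", f"    r = [0] * {m + n}"]
    for j in range(n):
        lines.append("    c = 0")
        for i in range(m):
            lines.append(f"    t = a[{i}] * b[{j}] + r[{i + j}] + c")
            lines.append(f"    r[{i + j}] = t & {MASK}")
            lines.append("    c = t >> 64")
        lines.append(f"    r[{m + j}] = c")
    lines.append("    return r")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace[f"mul_{m}x{n}"]


# Lookup table of hardcoded routines for all sizes up to 4 x 4 words (m >= n).
TABLE = {(m, n): make_fixed(m, n) for m in range(1, 5) for n in range(1, m + 1)}


def mul(a, b):
    """Dispatch to a fixed-size routine when one exists, else fall back to the loop."""
    return TABLE.get((len(a), len(b)), mul_basecase)(a, b)


def to_limbs(x, k):
    return [(x >> (64 * i)) & MASK for i in range(k)]


def from_limbs(limbs):
    return sum(v << (64 * i) for i, v in enumerate(limbs))
```

In compiled code, the point of the fixed-size routines is that loop control, bounds and carries become straight-line instructions the compiler can schedule freely; the table lookup replaces a run-time loop over the sizes.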

2024, December 16

Linear ordinary integro-differential systems: an algorithmic study

Time: from 11h00 to 12h00

Room: Grace Hopper

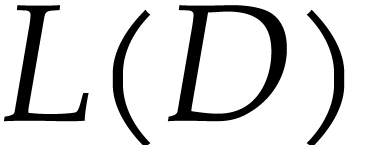

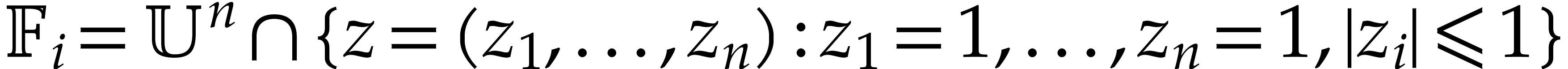

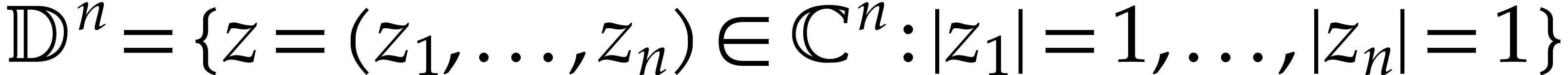

This talk aims to show that an algorithmic approach to linear polynomial ordinary integro-differential systems with separable kernels can be developed and implemented in a computer algebra system such as Maple.

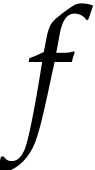

To do this, we will introduce the ring  of ordinary integro-differential operators with polynomial coefficients. This ring contains the Weyl algebra  of ordinary differential operators with polynomial coefficients. Unlike  ,  has zero divisors and is not Noetherian. However, in 2013,  is a coherent ring. This result opens the possibility of an effective study of linear polynomial integro-differential systems, because these systems define finitely presented left  modules and the category of finitely presented modules over a coherent ring resembles the category of finitely generated modules over a Noetherian ring. However, the proof given by  can be obtained and implemented. This result shows that the kernel of a matrix with entries in  can be computed effectively. Then, an algorithmic elimination theory can be developed.

Finally, we will show that  is effective in the sense that linear systems of the form  or  , where  and  are two fixed matrices with entries in  and  is an unknown matrix with entries in  , are algorithmically solvable. Thus, methods of homological algebra can be made effective and implemented, showing the possibility of developing an algebraic analysis approach for this class of linear functional systems.

Explicit examples and calculations will illustrate the talk.

This work was done in collaboration with

2024, November 4

Computing Krylov iterates and characteristic polynomials in the time of matrix multiplication

Time: from 11h00 to 12h00

Room: Henri Poincaré

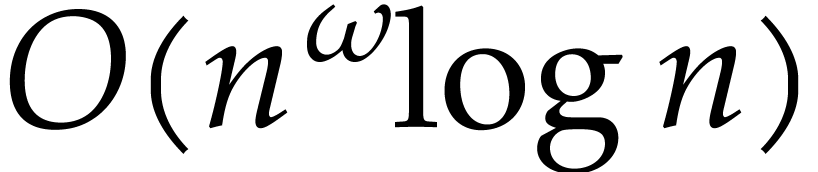

This talk describes recent work with  over a field, we will describe two main algorithms. The first one computes the characteristic polynomial within the same asymptotic complexity as the multiplication of two  matrices, that is, in  operations in the field. The second one takes an additional list of  vectors and computes a Krylov basis associated to  and these vectors, also in  , assuming  is not too close to  . These two related problems had previously been solved in complexity  (  rely on genericity assumptions or randomization (
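For readers less familiar with the objects in this abstract, the textbook link between Krylov iterates and the characteristic polynomial can be sketched as follows. If v, Av, ..., A^(n-1)v form a basis (v is a cyclic vector), then A^n v = -(c_0 v + c_1 Av + ... + c_(n-1) A^(n-1) v) and det(xI - A) = x^n + c_(n-1) x^(n-1) + ... + c_0. This sketch uses exact rationals and plain Gaussian elimination, so it costs O(n^3) field operations; it assumes a cyclic vector is supplied and is not the algorithm of the talk. The function names are hypothetical.

```python
from fractions import Fraction


def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def solve(M, rhs):
    """Gauss-Jordan elimination over the rationals; raises StopIteration if M is singular."""
    n = len(M)
    T = [[Fraction(x) for x in row] + [Fraction(y)] for row, y in zip(M, rhs)]
    for col in range(n):
        p = next(r for r in range(col, n) if T[r][col] != 0)
        T[col], T[p] = T[p], T[col]
        for r in range(n):
            if r != col and T[r][col] != 0:
                f = T[r][col] / T[col][col]
                T[r] = [x - f * y for x, y in zip(T[r], T[col])]
    return [T[i][n] / T[i][i] for i in range(n)]


def krylov_iterates(A, v, k):
    """Return [v, Av, ..., A^(k-1) v]."""
    out = [list(v)]
    for _ in range(k - 1):
        out.append(matvec(A, out[-1]))
    return out


def charpoly_krylov(A, v):
    """Coefficients c_0, ..., c_n (lowest first) of det(xI - A), assuming v is cyclic."""
    n = len(A)
    K = krylov_iterates(A, v, n + 1)
    # The columns of the system are v, Av, ..., A^(n-1) v; the right-hand side is -A^n v.
    M = [[K[k][i] for k in range(n)] for i in range(n)]
    return solve(M, [-x for x in K[n]]) + [Fraction(1)]
```

For example, `charpoly_krylov([[2, 1], [0, 3]], [0, 1])` gives the coefficients of x^2 - 5x + 6 = (x - 2)(x - 3). The talk's contribution is to reach the cost of one matrix multiplication, which this naive sketch does not attempt.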

2024, October 7

Discretization error with Euler's method: Upper bound and applications

Time: from 11h00 to 12h00

Room: Grace Hopper

Using the notion of (contractive) matrix measure, we give an upper bound on the discretization error with Euler's method. As applications, we give a method for ensuring the orbital stability of differential systems, and conditions on neural networks ensuring the convergence of the training error to 0.
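As a point of reference for the two ingredients named above, the sketch below computes the matrix measure (logarithmic norm) induced by the infinity norm, mu(A) = max_i (a_ii + sum over j != i of |a_ij|), where a negative value means the linear flow x' = Ax is contractive in that norm, and runs explicit Euler on such a system. The restriction to linear systems and the function names are our own assumptions; this does not reproduce the upper bound presented in the talk.

```python
def matrix_measure_inf(A):
    """Logarithmic norm induced by the infinity norm: max_i (a_ii + sum_{j != i} |a_ij|)."""
    n = len(A)
    return max(A[i][i] + sum(abs(A[i][j]) for j in range(n) if j != i) for i in range(n))


def euler(A, x0, T, h):
    """Explicit Euler for the linear system x' = A x on [0, T] with step h."""
    x = list(x0)
    for _ in range(round(T / h)):
        dx = [sum(a * xi for a, xi in zip(row, x)) for row in A]
        x = [xi + h * d for xi, d in zip(x, dx)]
    return x
```

For instance, `matrix_measure_inf([[-2, 1], [0, -3]])` is -1, so that system is contractive, and on x' = -x the Euler error at T = 1 shrinks roughly tenfold when h goes from 0.1 to 0.01, as expected for a first-order method.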

2024, September 23

Puzzle Ideals for Grassmannians

Time: from 11h00 to 12h00

Room: Grace Hopper

Puzzles, first introduced by

This talk is based on joint work with

2024, June 10

Envy-free cake cutting: a polynomial number of queries with high probability

Time: from 11h00 to 12h00

Room: Emmy Noether

The problem of cutting a cake among  guests consists in dividing it so that each guest receives their “fair” share. The guests may have different tastes, and the cake may have several flavors (chocolate, vanilla, strawberry, etc.). In this talk, we will present how the cake-cutting problem can be modeled and which model of computation is used to study the complexity of algorithms. We will also see that there are different notions of “fair share”. Then, we will see that there exists an algorithm that avoids envy among the guests and has the following property: when we consider guests “at random”, the probability of having to ask them many questions to carry out the division is small.
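To illustrate what “asking questions” means, here is a sketch of the classical query model usually attributed to Robertson and Webb, in which an algorithm may only ask Eval queries (the value of a piece for an agent) and Cut queries (where an agent would cut to obtain a given value), together with cut and choose, the textbook envy-free protocol for two agents. That the talk uses exactly this model is our assumption, the piecewise-constant valuations are chosen for simplicity, and this is not the algorithm presented in the talk, which handles many guests.

```python
from fractions import Fraction


class Agent:
    """Agent with a piecewise-constant valuation density on the cake [0, 1]."""

    def __init__(self, weights):
        self.width = Fraction(1, len(weights))
        total = sum(Fraction(w) for w in weights) * self.width
        self.density = [Fraction(w) / total for w in weights]  # whole cake is worth 1
        self.queries = 0

    def _value_up_to(self, x):
        acc = Fraction(0)
        for i, d in enumerate(self.density):
            lo = i * self.width
            if x <= lo:
                break
            acc += d * (min(x, lo + self.width) - lo)
        return acc

    def eval(self, a, b):
        """Eval query: this agent's value of the piece [a, b]."""
        self.queries += 1
        return self._value_up_to(b) - self._value_up_to(a)

    def cut(self, a, alpha):
        """Cut query: a point b such that the piece [a, b] is worth alpha to this agent."""
        self.queries += 1
        target = self._value_up_to(a) + alpha
        acc = Fraction(0)
        for i, d in enumerate(self.density):
            piece = d * self.width
            if d > 0 and acc + piece >= target:
                return i * self.width + (target - acc) / d
            acc += piece
        return Fraction(1)


def cut_and_choose(cutter, chooser):
    """Envy-free division for two agents: one cuts in half by her measure, the other picks."""
    x = cutter.cut(Fraction(0), Fraction(1, 2))
    left, right = (Fraction(0), x), (x, Fraction(1))
    if chooser.eval(*left) >= Fraction(1, 2):
        return {"chooser": left, "cutter": right}
    return {"chooser": right, "cutter": left}
```

The `queries` counters record the complexity measure of this model: cut and choose always uses exactly two queries, whereas envy-free protocols for many agents can require far more, which is what makes a high-probability polynomial bound interesting.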


2024, May 27 (Postponed until the Fall)

Faster modular composition of polynomials

Time: from 11h00 to 12h00

Room: Grace Hopper

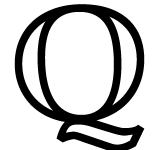

This talk is about algorithms for modular composition of univariate polynomials, and for computing minimal polynomials. For two univariate polynomials  and  over a commutative field, modular composition asks to compute  for some given  , while the minimal polynomial problem is to compute  of minimal degree such that  . We propose algorithms whose complexity bound improves upon previous algorithms, and in particular upon  and  , even when using cubic-time matrix multiplication. Our improvement comes from the fast computation of specific bases of bivariate ideals, and from efficient operations with these bases thanks to fast univariate polynomial matrix algorithms. We will also report on experimental results using the Polynomial Matrix Library.

Contains joint work with

The corresponding article may be found here or here.
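To fix ideas about the problem itself, the sketch below computes a modular composition in the most naive way: g(h) mod f over GF(p) by Horner's rule, which costs about deg(g) multiplications modulo f. The notation f, g, h and p, the coefficient-list representation and the function names are our own choices (the abstract's symbols were lost); Brent and Kung's baby-step/giant-step method is the classical improvement over this baseline, and the algorithms of the talk go further.

```python
def poly_rem(a, f, p):
    """Remainder of a modulo the monic polynomial f over GF(p).

    Polynomials are coefficient lists, lowest degree first; [] is zero.
    """
    a = [x % p for x in a]
    d = len(f) - 1
    for k in range(len(a) - 1, d - 1, -1):
        c = a[k]
        if c:
            # Subtract c * x^(k-d) * f, which cancels the coefficient of x^k.
            for i in range(d + 1):
                a[k - d + i] = (a[k - d + i] - c * f[i]) % p
    a = a[:d]
    while a and a[-1] == 0:
        a.pop()
    return a


def poly_mulmod(a, b, f, p):
    """(a * b) mod f over GF(p), by schoolbook multiplication then reduction."""
    if not a or not b:
        return []
    prod = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            prod[i + j] = (prod[i + j] + x * y) % p
    return poly_rem(prod, f, p)


def add_constant(a, c, p):
    """a + c over GF(p), with trailing zeros stripped."""
    a = list(a) or [0]
    a[0] = (a[0] + c) % p
    while a and a[-1] == 0:
        a.pop()
    return a


def modular_composition(g, h, f, p):
    """Naive g(h) mod f over GF(p) via Horner's rule: about deg(g) products modulo f."""
    result = []
    for coeff in reversed(g):
        result = add_constant(poly_mulmod(result, h, f, p), coeff, p)
    return result
```

For example, with f = x^3 - 2 over GF(7), encoded as `[5, 0, 0, 1]`, composing g = x^3 with h = x gives the constant 2, since x^3 is congruent to 2 modulo f.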

2024, April 29

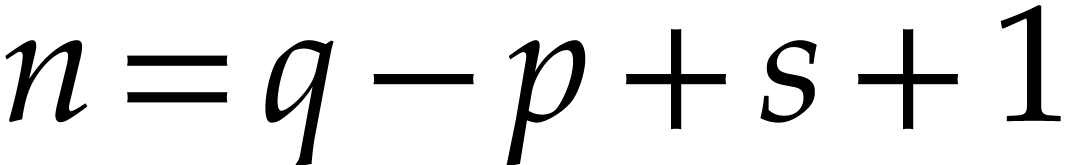

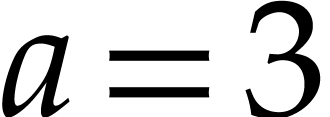

On the structure of differentially homogeneous polynomials

Time: from 11h00 to 12h00

Room: Grace Hopper

The goal of the talk is to discuss the structure of differentially homogeneous polynomials in  variables, and its interpretation. We will mainly talk about the structure as a vector space: it is a graded algebra, whose  -th graded component has dimension  . This was predicted by the so-called Schmidt-Kolchin conjecture. We will also (briefly) talk about the expected structure as an algebra: this is ongoing work, currently being finalized.

2024, April 22

Factoring differential operators in positive characteristic through geometric means

Time: from 15h30 to 16h30

Room: Henri Poincaré

We focus on the problem of factoring a given linear differential operator whose coefficients are elements of an algebraic function field of characteristic  . After a brief presentation of the previous works in that domain, in large part due to  -Riccati equation over a finite separable extension  of  . We will show that solving this equation can be done in polynomial time in  and the genus of  through the use of Riemann-Roch spaces and the divisor class group of  . If time allows, we shall also present an irreducibility test relying on a description of the Brauer group of  in terms of its local Brauer groups. The irreducibility test can be done in polynomial time in  and the genus of  .

2024, March 25

Multigraded Castelnuovo-Mumford regularity and Groebner bases

Time: from 11h00 to 12h00

Room: Grace Hopper

Groebner bases (GBs) are the “Swiss Army knife” of symbolic computations with polynomials. They are a special set of generators of an ideal which allow us to manipulate extremely complicated objects, so computing them is an intrinsically hard problem. To estimate the complexity of such computations, a common approach is to bound the maximal degrees of the polynomials appearing in the GBs. One of the most important results in this direction is due to Bayer and Stillman, who showed in the 1980s that, in generic coordinates, the maximal degree of an element in a GB of a homogeneous ideal with respect to the reverse lexicographical order is determined by the Castelnuovo-Mumford regularity of the ideal, an algebraic invariant independent of the GB. In this talk, I will present a generalization of their results to multi-homogeneous systems and show how the extension of the Castelnuovo-Mumford regularity to multi-graded ideals relates to the maximal degrees appearing in the computation of a GB. This talk is based on ongoing work with

2024, March 18

An introduction to computer-assisted proofs via a posteriori validation

Time: from 11h00 to 12h00

Room: Grace Hopper

The goal of a posteriori validation methods is to get a quantitative and rigorous description of some specific solutions of nonlinear ODEs or PDEs, based on numerical simulations. The general strategy consists in combining a priori and a posteriori error estimates, interval arithmetic, and a fixed point theorem applied to a quasi-Newton operator. Starting from a numerically computed approximate solution, one can then prove the existence of a true solution in a small and explicit neighborhood of the numerical approximation.

I will first present the main ideas behind these techniques on a simple example, and then describe how they can be used for rigorously integrating some differential equations.
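The strategy above (approximate solution, enclosures, fixed-point theorem for a quasi-Newton operator) can be illustrated on the simplest possible case, a scalar equation, with the classical Krawczyk operator K(X) = x̄ - C f(x̄) + (1 - C f′(X))(X - x̄), where C approximates 1/f′(x̄). If K(X) lies in the interior of X = [x̄ - r, x̄ + r], then f has a unique zero in X. This is a minimal sketch under our own assumptions: the example f(x) = x^2 - 2 and the function names are hypothetical, and exact rational arithmetic from the `fractions` module stands in for the directed-rounding interval arithmetic a real validation would use.

```python
from fractions import Fraction


def imul(a, b):
    """Product of two intervals given as (lo, hi) pairs of exact rationals."""
    products = [a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1]]
    return (min(products), max(products))


def validate_sqrt2(xbar, r):
    """Krawczyk test for f(x) = x^2 - 2 on X = [xbar - r, xbar + r], with xbar > 0, r > 0.

    Returns True if K(X) lies in the interior of X, which proves that f has a
    unique zero in X.
    """
    xbar, r = Fraction(xbar), Fraction(r)
    X = (xbar - r, xbar + r)
    C = 1 / (2 * xbar)                         # approximate inverse of f'(xbar), C > 0
    newton_point = xbar - C * (xbar * xbar - 2)
    dfX = (2 * X[0], 2 * X[1])                 # enclosure of f'(X) = 2X
    slope = (1 - C * dfX[1], 1 - C * dfX[0])   # 1 - C f'(X); endpoints swap since C > 0
    spread = imul(slope, (-r, r))              # (1 - C f'(X)) (X - xbar)
    K = (newton_point + spread[0], newton_point + spread[1])
    return X[0] < K[0] and K[1] < X[1]
```

Starting from the numerical approximation 1.414, `validate_sqrt2("1.414", "0.01")` succeeds and thus proves the existence of a true root within 0.01 of it, while a poor approximation such as 1.5 with the same radius fails the test.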

2024, March 11

On rule-based models of dynamical systems

Time: from 11h00 to 12h00

Room: Grace Hopper

Chemical reaction networks (CRN) constitute a standard formalism used in systems biology to represent high-level cell processes in terms of low-level molecular interactions. A CRN is a finite set of formal kinetic reaction rules with well-defined hypergraph structure and several possible dynamics. One CRN can be interpreted in a hierarchy of formal semantics related by either approximation or abstraction relationships, including

- the differential semantics (ordinary differential equation),
- stochastic semantics (continuous-time Markov chain),
- probabilistic semantics (probabilistic Petri net forgetting about continuous time),
- discrete semantics (Petri net forgetting about transition probabilities),
- Boolean semantics forgetting about molecular numbers,
- or just the hypergraph structure semantics.

We shall show how these different semantics come with different analysis tools which can reveal various dynamical properties of the other interpretations. In our CRN modeling software BIOCHAM (biochemical abstract machine), these static analysis tools are complemented by dynamic analysis tools based on quantitative temporal logic, and by an original CRN synthesis symbolic computation pipeline for compiling any computable real function into an elementary CRN over a finite set of abstract molecular species.
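The first level of the hierarchy, the differential semantics, is obtained from the reaction rules by the law of mass action: each reaction fires at rate k times the product of its reactant concentrations (raised to their stoichiometries), consuming reactants and producing products accordingly. The sketch below builds this ODE right-hand side from a list of rules; the rule encoding `(reactants, products, k)` and the function names are our own assumptions and do not reflect BIOCHAM's syntax or API.

```python
def mass_action(reactions):
    """Build the right-hand side of the mass-action ODE of a CRN.

    Each reaction is (reactants, products, k), where reactants and products map
    species names to stoichiometric coefficients and k is the rate constant.
    """
    def rhs(conc):
        deriv = {s: 0.0 for s in conc}
        for reactants, products, k in reactions:
            rate = k
            for s, m in reactants.items():
                rate *= conc[s] ** m
            for s, m in reactants.items():
                deriv[s] -= m * rate
            for s, m in products.items():
                deriv[s] += m * rate
        return deriv
    return rhs


def simulate(rhs, conc, t_end, h):
    """Crude explicit-Euler integration of the differential semantics."""
    conc = dict(conc)
    for _ in range(round(t_end / h)):
        d = rhs(conc)
        conc = {s: conc[s] + h * d[s] for s in conc}
    return conc
```

For the single rule A + B => C with k = 2, the ODE is a′ = b′ = -2ab and c′ = 2ab; forgetting the rates and keeping only which species are present would give the Boolean semantics.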

2024, February 26

Approximate flatness-based control via a case study: Drugs administration in some cancer treatments

Time: from 11h00 to 12h00

Room: Grace Hopper

We present some “in silico” experiments to design combined chemo- and immunotherapy treatment schedules. We introduce a new framework combining flatness-based control, which is a model-based setting, with model-free control. The flatness property of the mathematical model used yields straightforward reference trajectories. They provide us with the nominal open-loop control inputs. Closing the loop via model-free control allows one to deal with the uncertainties on the injected drug doses. Several numerical simulations illustrating different case studies are displayed. We show in particular that the considered health indicators are driven to the safe region, even for critical initial conditions. Furthermore, in some specific cases there is no need to inject chemotherapeutic agents.

Joint work with

2023, December 8

Application of differential elimination for physics-based deep learning and computing

Time: from 14h00 to 15h00

Room: Darboux amphitheater, Institut Henri Poincaré (11 Rue Pierre et Marie Curie, Paris)

In the field of differential algebra, differential elimination refers to the elimination of specific variables and/or their derivatives from differential equations. One well-known application of differential elimination is in the model identifiability analysis. Recently, there has been a growing demand for further applications in addition to this.

In this talk, we introduce two recent applications in the field related to physics. The first application field is physics-based deep learning. In particular, we introduce the application of differential elimination for Physics-Informed Neural Networks (PINNs), which are types of deep neural networks integrating governing equations behind the data. The second application is about physics-based computing, or, physical computing. This refers to information processing leveraging the dynamics of physical systems such as soft materials and fluids. In this talk, we show the application of differential elimination to time-series information processing via physical computing.

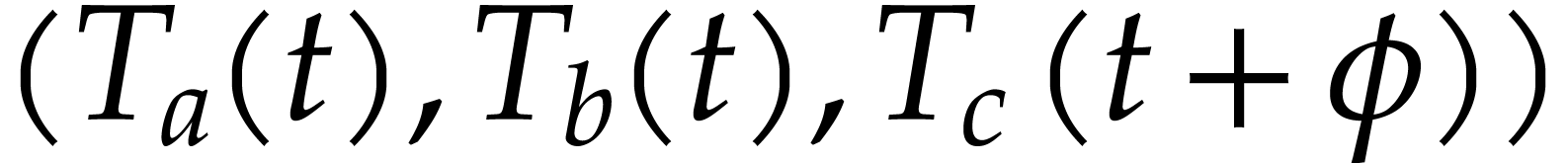

2023, April 24

Set-based methods for the analysis of dynamical systems

Time: from 11h00 to 12h00

Room: Grace Hopper

In this talk, I will describe some set-based methods (i.e. “guaranteed” numerical methods) that we developed to help analyze and validate dynamical (and control) systems. I will go through a number of problems, ranging from plain reachability, reach-avoid and robust reachability to invariance and general temporal specifications. The robust reachability and general temporal specifications will introduce the problem of solving some form of quantifier elimination, for which we will give a simple set-based method for inner- and outer-approximating the set of solutions. Based on joint work with

2023, April 17

Computer algebra and chaotic diffusion of planetary motions in the solar system

Time: from 11h00 to 12h00

Room: Henri Poincaré

The chaotic motion of the planets in the solar system was established through an integration of the averaged equations of their motion (Laskar, 1989). This system of equations, containing more than 150,000 terms, had been obtained with highly specialized computer algebra methods that were not easy to adapt. In 1988, the development of a general-purpose computer algebra system, TRIP, specially adapted to celestial mechanics computations, was started. We recently used this system to gain a better understanding of the origin of chaos in the solar system, and to study the chaotic diffusion of planetary motion over durations far exceeding the age of the universe.

2023, March 27

Finding exact linear reductions of dynamical models

Time: from 11h00 to 12h00

Room: Grace Hopper

Dynamical models described by systems of polynomial differential equations are a fundamental tool in the sciences and engineering. Exact model reduction aims at finding a set of combinations of the original variables which themselves satisfy a self-contained system. There exist algorithmic solutions which are able to rapidly find linear reductions of the lowest dimension under certain additional constraints on the input or output.

In this talk, I will present a general algorithm for finding exact linear reductions. The algorithm finds a maximal chain of reductions by reducing the question to a search for invariant subspaces of matrix algebras. I will describe our implementation and show how it can be applied to models from the literature to find reductions which would be missed by earlier approaches.

This is joint work with

2023, March 20

Using Algebraic Geometry for Solving Differential Equations

Time: from 11h00 to 12h00

Room: Henri Poincaré

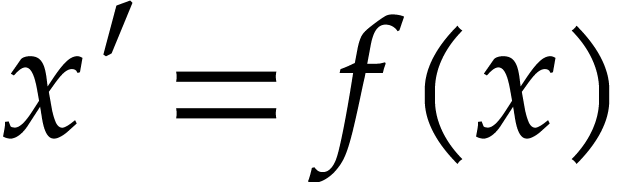

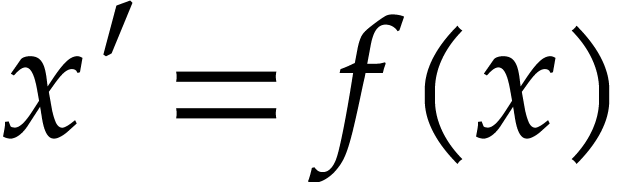

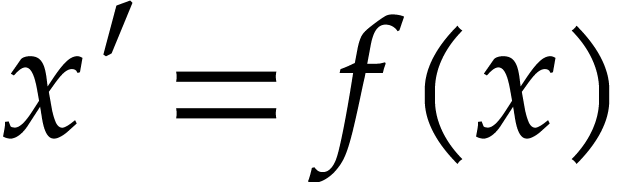

Given a first-order autonomous algebraic ordinary differential equation, i.e. an equation of the form F(y, y′) = 0 with polynomial F and complex coefficients, we present a method to compute all formal power series solutions with fractional exponents. By considering y and y′ as independent variables, results from algebraic geometry can be applied to the implicitly defined plane curve. This leads to a complete characterization of initial values with respect to the number of distinct solutions extending them. Furthermore, the computed formal solutions are convergent in suitable neighborhoods, and for any given point in the complex plane there exists a solution of the differential equation which defines an analytic curve passing through this point. These results can be generalized to systems of ODEs which implicitly define a space curve and to local solutions with real coefficients only. This is joint work with

2023, February 20

Faster algorithms for symmetric polynomial systems

Time: from 11h00 to 12h00

Room: Philippe Flajolet

Many important polynomial systems have additional structure, for example, generating polynomials invariant under the action of the symmetric group. In this talk we consider two problems for such systems. The first focuses on computing the critical points of a polynomial map restricted to an algebraic variety, a problem which appears in many application areas including polynomial optimization. Our second problem is to decide the emptiness of algebraic varieties over real fields, a starting point for many computations in real algebraic geometry. In both cases we provide efficient probabilistic algorithms that take advantage of the special invariant structure. In particular, in both instances our algorithms obtain their efficiency by reducing the computations to ones over the group orbits, and make use of tools such as weighted polynomial domains and symbolic homotopy methods.

2022, December 12

Session dedicated to the Computer Mathematics research group

Sparse interpolation

Time: from 11h00 to 12h00

Room: Grace Hopper

Computer algebra deals with exact computations with mathematical formulas. These formulas are often very large, and they can often be rewritten as polynomials or rational functions in well-chosen variables. Direct computations with such expressions can be very expensive and may lead to a further explosion of the size of intermediate expressions. Another approach is to work systematically with evaluations. For a given problem, like inverting a matrix with polynomial coefficients, evaluations of the solution might be relatively cheap to compute. Sparse interpolation can then be used to recover the result in symbolic form from sufficiently many evaluations. In our talk, we will survey a few classical approaches to sparse interpolation as well as a new one, while mentioning a few links with other subjects.
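As an illustration of one classical approach, in the spirit of Ben-Or and Tiwari (whether the talk covers this exact variant is an assumption), the sketch below recovers a univariate polynomial with exactly $t$ terms and integer coefficients from its $2t$ values at $1, 2, 4, \dots$. A Hankel system yields the polynomial whose roots are the $2^{e_j}$, the exponents are found by testing candidates up to a degree bound, and a Vandermonde system gives the coefficients. Exact rational arithmetic avoids numerical issues; all names are hypothetical.

```python
from fractions import Fraction


def solve(A, b):
    """Solve A z = b exactly over the rationals (A square and invertible)."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(y)] for row, y in zip(A, b)]
    for c in range(n):
        p = next(r for r in range(c, n) if M[r][c] != 0)  # pivot row
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c and M[r][c] != 0:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def sparse_interpolate(f, t, max_deg):
    """Recover f = sum_j c_j x^(e_j), with exactly t terms and e_j <= max_deg,
    from the 2t values f(2^i), i = 0, ..., 2t - 1."""
    a = [Fraction(f(2 ** i)) for i in range(2 * t)]
    # a_i = sum_j c_j b_j^i with b_j = 2^(e_j), so a satisfies the recurrence
    # a_{i+t} + sum_k lam_k a_{i+k} = 0, where z^t + sum_k lam_k z^k = prod_j (z - b_j).
    H = [[a[i + k] for k in range(t)] for i in range(t)]
    lam = solve(H, [-a[i + t] for i in range(t)])
    # The roots of that polynomial are powers of 2: test every candidate exponent.
    exps = [e for e in range(max_deg + 1)
            if 2 ** (e * t) + sum(l * 2 ** (e * k) for k, l in enumerate(lam)) == 0]
    # Vandermonde system sum_j c_j b_j^i = a_i for i < t gives the coefficients.
    V = [[2 ** (e * i) for e in exps] for i in range(len(exps))]
    return dict(zip(exps, solve(V, a[:len(exps)])))


print(sparse_interpolate(lambda x: 3 * x**5 - 7 * x**2 + 2 * x**40, 3, 50))
# {2: Fraction(-7, 1), 5: Fraction(3, 1), 40: Fraction(2, 1)}
```

Only six evaluations are used although the degree is 40: the number of evaluations depends on the number of terms, not on the degree, which is the point of sparse interpolation. Real implementations work modulo primes instead of with big rationals.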

2022, December 5

Cache complexity in computer algebra

Time: from 11h00 to 12h00

Room: Grace Hopper

In the computer algebra literature, optimizing memory usage (e.g. minimizing cache misses) is often considered only at the software implementation stage and not at the algorithm design stage, which can result in missed opportunities, say, with respect to portability or scalability.

In this talk, we will discuss ideas for taking cache complexity, in combination with other complexity measures, into account at the algorithm design stage. We will start with a review of different memory models (I/O complexity, cache complexity, etc.). We will then go through illustrative examples considering both multi-core and many-core architectures.
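To make the notion of cache complexity concrete, here is a small simulation, not taken from the talk: a fully associative cache of `size` words organised in lines of `line` words, with least-recently-used eviction (a common stand-in for the optimal replacement of the ideal cache model). It counts misses when an $n \times n$ row-major matrix is read row by row or column by column. The cache parameters and names are illustrative assumptions.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache of `size` words in lines of `line` words, LRU eviction."""

    def __init__(self, size, line):
        self.capacity = size // line  # number of cache lines
        self.line = line
        self.lines = OrderedDict()
        self.misses = 0

    def access(self, address):
        tag = address // self.line
        if tag in self.lines:
            self.lines.move_to_end(tag)  # mark as most recently used
        else:
            self.misses += 1
            self.lines[tag] = None
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)  # evict the least recently used line


def traversal_misses(n, by_rows, size=256, line=8):
    """Cache misses when reading an n x n row-major matrix by rows or by columns."""
    cache = LRUCache(size, line)
    for a in range(n):
        for b in range(n):
            i, j = (a, b) if by_rows else (b, a)
            cache.access(i * n + j)
    return cache.misses


print(traversal_misses(64, by_rows=True), traversal_misses(64, by_rows=False))  # 512 4096
```

Both loops perform the same $n^2$ reads, so they are indistinguishable in the usual operation count, yet the row traversal incurs $n^2/L$ misses and the column traversal $n^2$ once a column no longer fits in cache. This is the kind of gap that cache-aware algorithm design targets.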

2022, November 14

Analytic combinatorics and partial differential equations

Time: from 11h00 to 12h00

Room: Grace Hopper

Many combinatorial structures and probabilistic processes lead to generating functions satisfying partial differential equations, and, in some cases, they even satisfy ordinary differential equations (they are D-finite).

In my talk, I will present results from the last three years illustrating this principle, together with their asymptotic consequences, on fundamental objects like Pólya urns, Young tableaux with walls, increasing trees, posets…

I will also prove that some cases are differentially algebraic (not D-finite), and possess surprising stretched exponential asymptotics involving exponents which are zeroes of the Airy function. The techniques are essentially extensions of the methods of analytic combinatorics (generating functions and complex analysis), as presented in the eponymous wonderful book of Flajolet and Sedgewick. En passant, I will also present a new algorithm for uniform random generation (the density method), and new universal distributions, establishing the asymptotic fluctuations of the surface of triangular Young tableaux.

This talk is based on joint work with Philippe Marchal and Michael Wallner:

Periodic Pólya urns, the density method, and asymptotics of Young tableaux, Annals of Probability, 2020.

Young tableaux with periodic walls: counting with the density method, FPSAC, 2021.
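For readers new to urn models, the sketch below computes the exact law of the simplest Pólya urn: one white and one black ball, and each drawn ball is returned together with one more ball of its colour. It is the classical urn, not the periodic urns of the talk, and the function name is hypothetical. After $n$ draws the number of white balls is uniformly distributed on $\{1, \dots, n+1\}$, a first example of the exact laws that generating functions and differential equations capture.

```python
from fractions import Fraction


def polya_white_distribution(n):
    """Exact law of the number of white balls after n draws of the classical Pólya urn
    (start with one white and one black ball; add one ball of the drawn colour)."""
    dist = {1: Fraction(1)}  # number of white balls -> probability
    for k in range(n):
        total = 2 + k  # balls in the urn before the (k + 1)-th draw
        new = {}
        for w, pr in dist.items():
            new[w + 1] = new.get(w + 1, 0) + pr * Fraction(w, total)      # white drawn
            new[w] = new.get(w, 0) + pr * Fraction(total - w, total)      # black drawn
        dist = new
    return dist


print(polya_white_distribution(4))  # every value 1, ..., 5 has probability 1/5
```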

2022, November 7

Integral Equation Modelling and Deep Learning

Time: from 11h00 to 12h00

Room: Gilles Kahn

The use of integro-differential equations in models is motivated as follows: on some examples, the introduction of integral equations increases the expressiveness of the models, improves the estimation of parameter values from error-prone measurements, and reduces the size of the intermediate equations.

Reducing the order of derivation of a differential equation can sometimes be achieved by integrating it. An algorithm was designed by the CFHP team for that purpose. However, integrating integro-differential equations is a difficult problem in general, and there are still plenty of differential equations to which the algorithm does not apply; for example, computing an integrating factor might be required.

Rather than integrating the differential equation, we can perform an integral elimination. We are currently working on this technique, which also raises some integration problems. To try to overcome these problems, we are also using deep learning techniques, in the hope of finding small calculus tricks.
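A textbook illustration of both situations, not taken from the talk: the first equation is already an exact derivative and integrates directly to a first-order equation, while the second only becomes an exact derivative after multiplication by the integrating factor $x$.

```latex
\begin{aligned}
&y'' + y' = 0 \;\Longleftrightarrow\; (y' + y)' = 0 \;\Longrightarrow\; y' + y = c_1,\\
&y'' + \tfrac{1}{x}\, y' = 0 \;\xrightarrow{\;\times\, x\;}\; x\, y'' + y' = (x\, y')' = 0
 \;\Longrightarrow\; x\, y' = c_1.
\end{aligned}
```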

2022, June 28

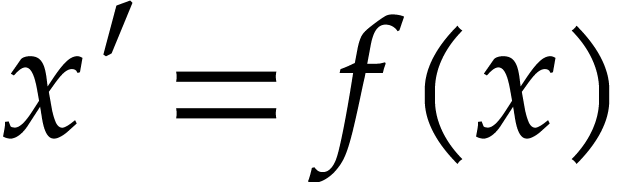

Exact nonlinear reductions of dynamical systems

Time: from 11h00 to 12h00

Room: Grace Hopper

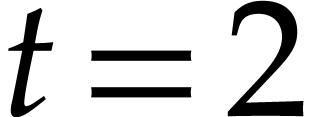

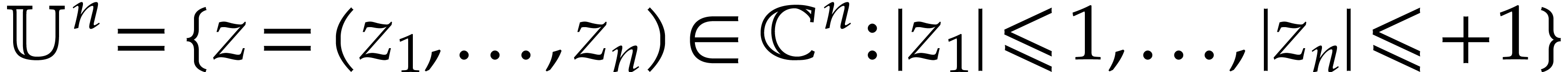

Dynamical systems of the form $\dot{x} = f(x)$ are widely used in many sciences. Numerical procedures, such as simulation and parameter estimation, do not work efficiently due to the high dimension of the dynamical systems. We studied exact reductions of the system, i.e., computing macro-variables that satisfy self-consistent differential systems. Thanks to the software CLUE, we can reduce the dimension of polynomial and rational dynamical systems by a linear change of variables (i.e., macro-variables are linear combinations of the original variables) preserving some linear quantities from the original state variables. In this talk we are going to present a new method to reduce the dimension of these dynamical systems even further by performing a nonlinear change of variables. We are going to show how to look for reductions of this type, how to preserve some information from the original system and how to compute the new self-consistent system that the new macro-variables satisfy.
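A small hand-made example of what "self-consistent" means for a nonlinear macro-variable (it is not an example from the talk, and it says nothing about how the method searches for reductions). For the hypothetical system $x_1' = x_1 + x_1^2 x_2$, $x_2' = x_2$, the product $z = x_1 x_2$ satisfies $z' = 2z + z^2$, a closed one-dimensional equation. The sketch checks this symbolically with a minimal dictionary representation of polynomials, computing the Lie derivative $\sum_i f_i\, \partial z / \partial x_i$.

```python
from collections import defaultdict

# Polynomials in x1, ..., xn are dicts {exponent tuple: coefficient}.


def add(p, q):
    r = defaultdict(int)
    for m, c in list(p.items()) + list(q.items()):
        r[m] += c
    return {m: c for m, c in r.items() if c != 0}


def mul(p, q):
    r = defaultdict(int)
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            r[tuple(a + b for a, b in zip(m1, m2))] += c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def diff(p, i):
    """Partial derivative with respect to the variable of index i."""
    r = {}
    for m, c in p.items():
        if m[i] > 0:
            m2 = list(m)
            m2[i] -= 1
            r[tuple(m2)] = c * m[i]
    return r


def lie_derivative(f, g):
    """Time derivative of g(x) along x' = f(x), i.e. sum_i f_i * dg/dx_i."""
    out = {}
    for i, fi in enumerate(f):
        out = add(out, mul(fi, diff(g, i)))
    return out


# Hypothetical example: x1' = x1 + x1^2 x2, x2' = x2, macro-variable z = x1 x2.
f = [{(1, 0): 1, (2, 1): 1}, {(0, 1): 1}]
z = {(1, 1): 1}
rhs = add({(1, 1): 2}, mul(z, z))  # 2 z + z^2, expanded in x1, x2
print(lie_derivative(f, z) == rhs)  # True: z' = 2 z + z^2 is self-consistent
```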

2022, May 24

Session dedicated to the Computer Mathematics research group

Asymptotic Expansions with Error Bounds for Solutions of Linear Recurrences

Time: from 11h00 to 12h00

Room: Grace Hopper

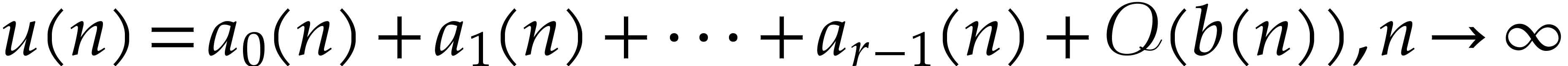

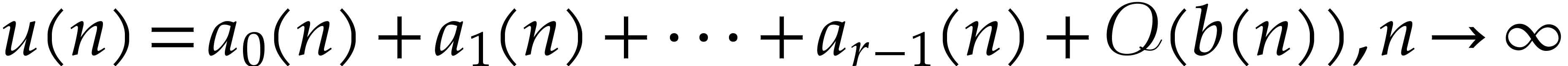

When a sequence $(u_n)$ of real or complex numbers satisfies a linear recurrence relation with polynomial coefficients, it is usually routine to determine its asymptotic behavior for large $n$. Well-known algorithms and readily available implementations are often able, given a recurrence satisfied by $(u_n)$ and some additional information on the particular solution, to compute an explicit asymptotic expansion up to any desired order. If, however, one wishes to prove an inequality satisfied by $u_n$ for all $n$, a big-Oh error term is not sufficient, and an explicit error bound on the difference between the sequence and its asymptotic approximation is required. In this talk, I will present a practical algorithm for computing such bounds, under the assumption that the generating series of the sequence $(u_n)$ is a solution of a differential equation with regular (Fuchsian) dominant singularities. I will show how it can be used to verify the positivity of a certain explicit sequence, which T. Yu and J. Chen recently proved to imply uniqueness of the surface of genus one in $\mathbb{R}^3$ of minimal bending energy when the isoperimetric ratio is prescribed.

Based on joint work with Ruiwen Dong and Stephen Melczer.
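A toy instance of the situation described above, not the algorithm of the talk. The central binomial coefficients $u_n = \binom{2n}{n}$ satisfy $(n+1)u_{n+1} = 2(2n+1)u_n$ and behave like $4^n/\sqrt{\pi n}$, and the classical explicit bounds $4^n/\sqrt{4n} \le u_n \le 4^n/\sqrt{3n+1}$ hold for all $n \ge 1$. The sketch checks these bounds on finitely many terms with exact integer arithmetic (squaring both sides to avoid square roots). Such a check is not a proof by itself; combining it for $n < n_0$ with an explicit error bound valid for $n \ge n_0$ is what turns it into one. Function names are hypothetical.

```python
def central_binomials(N):
    """u_0, ..., u_N from (n + 1) u_{n+1} = 2 (2n + 1) u_n, u_0 = 1."""
    u = [1]
    for n in range(N):
        # The division is exact because u_{n+1} is an integer.
        u.append(2 * (2 * n + 1) * u[n] // (n + 1))
    return u


def check_bounds(N):
    """Check 4^n / sqrt(4n) <= u_n <= 4^n / sqrt(3n + 1) for 1 <= n <= N, squared."""
    u = central_binomials(N)
    return all(4 * n * u[n] ** 2 >= 16 ** n and (3 * n + 1) * u[n] ** 2 <= 16 ** n
               for n in range(1, N + 1))


print(central_binomials(5))  # [1, 2, 6, 20, 70, 252]
print(check_bounds(200))     # True
```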

2022, May 10

Generalized flatness and motion planning for aircraft models

Time: from 11h00 to 12h00

Room: Grace Hopper

If one neglects the forces created by the controls, an aircraft is modeled by a flat system. First, one uses a feedback to compensate for model errors and keep the trajectory of the full non-flat system close to the trajectory computed for the flat approximation. Second, the values of the controls provided by the flat approximation are used in the full model for better precision, and the process is iterated, which provides a very precise motion planning for the full model. Some examples are provided to illustrate the possibilities of a Maple package.

This new approach is called generalized flatness. The provided parametrization depends on an infinite number of flat outputs, which supports a conjecture claiming that all controllable systems are flat if one allows parametrizations depending on an infinite number of derivatives. One should notice that the necessary flatness conditions of

2022, April 19

Simultaneous Rational Function Reconstruction with Errors: Handling Poles and Multiplicities.

Time: from 11h00 to 12h00

Room: Grace Hopper

In this talk I focus on an evaluation-interpolation technique for reconstructing a vector of rational functions (with the same denominator) in the presence of erroneous evaluations. This problem is also called Simultaneous Rational Function Reconstruction with errors (SRFRwE), and it has significant applications in computer algebra (e.g. for the parallel resolution of polynomial linear systems) and in algebraic coding theory (e.g. for the decoding of the interleaved version of Reed-Solomon codes). Indeed, a careful analysis of this problem leads to interesting results in both scientific domains. Starting from the SRFRwE, we then introduce its multi-precision generalization, in which we include evaluations with certain precisions. Our goal is to reconstruct a vector of rational functions given, among other information, a certain number of evaluation points, some of which may be poles of the vector that we want to recover. Our multi-precision generalization also allows us to handle poles with their respective orders. The main goal of this work is to determine a condition on the instance of the SRFRwE problem (and of its generalized version) which guarantees the uniqueness of the interpolating vector. This condition is crucial for the applications: in computer algebra it affects the complexity of the corresponding SRFRwE resolution algorithm, while in coding theory it affects the number of errors that can be corrected. We first determine a condition under which any errors can be corrected. Then we exploit and revisit results related to the decoding of interleaved Reed-Solomon codes in order to introduce a better condition for correcting random errors.
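For orientation, here is a sketch of the error-free, single-function base case that SRFRwE generalizes: classical rational function reconstruction over a prime field. It interpolates the values at the points $a_i$ into a polynomial $G$, then runs the extended Euclidean algorithm on $M = \prod_i (x - a_i)$ and $G$, stopping at the first remainder of degree at most the numerator bound. It handles neither errors, nor poles, nor vectors of functions, which is precisely what the talk adds. The prime `P = 97` and all function names are illustrative assumptions; polynomials are coefficient lists, lowest degree first.

```python
P = 97  # small prime modulus, chosen for illustration


def trim(a):
    """Drop zero leading coefficients (in place)."""
    while a and a[-1] % P == 0:
        a.pop()
    return a


def padd(a, b, sign=1):
    n = max(len(a), len(b))
    a, b = a + [0] * (n - len(a)), b + [0] * (n - len(b))
    return trim([(x + sign * y) % P for x, y in zip(a, b)])


def pmul(a, b):
    if not a or not b:
        return []
    r = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            r[i + j] = (r[i + j] + x * y) % P
    return trim(r)


def pdivmod(a, b):
    a, q = a[:], [0] * max(len(a) - len(b) + 1, 0)
    inv = pow(b[-1], P - 2, P)
    while a and len(a) >= len(b):
        c, d = a[-1] * inv % P, len(a) - len(b)
        q[d] = c
        for i, y in enumerate(b):
            a[i + d] = (a[i + d] - c * y) % P
        trim(a)
    return trim(q), a


def interpolate(xs, ys):
    """Lagrange interpolation modulo P."""
    G = []
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = [1], 1
        for j, xj in enumerate(xs):
            if j != i:
                num = pmul(num, [-xj % P, 1])
                den = den * (xi - xj) % P
        G = padd(G, pmul(num, [yi * pow(den, P - 2, P) % P]))
    return G


def reconstruct(xs, ys, num_deg):
    """Return (A, B), B monic, with A/B = ys at xs and deg A <= num_deg."""
    M = [1]
    for x in xs:
        M = pmul(M, [-x % P, 1])
    r0, r1, t0, t1 = M, interpolate(xs, ys), [], [1]
    # Invariant: r_i = t_i * G (mod M); stop at the first remainder with deg <= num_deg.
    while len(r1) - 1 > num_deg:
        q, r = pdivmod(r0, r1)
        r0, r1 = r1, r
        t0, t1 = t1, padd(t0, pmul(q, t1), sign=-1)
    inv = pow(t1[-1], P - 2, P)
    return pmul(r1, [inv]), pmul(t1, [inv])


# Values of (x + 2) / (x^2 + 3) at five points that are not poles.
xs = [1, 2, 3, 4, 5]
ys = [(x + 2) * pow(x * x + 3, P - 2, P) % P for x in xs]
print(reconstruct(xs, ys, 1))  # ([2, 1], [3, 0, 1]), i.e. (x + 2) / (x^2 + 3)
```

With $n$ points, a numerator of degree at most $d_A$ and a denominator of degree at most $n - 1 - d_A$, the reconstructed fraction is unique; a single wrong value already breaks this, which is why conditions for handling errors, as discussed in the talk, are needed.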

2021, December 14

Differential flatness for fractional order dynamic systems

Time: from 11h00 to 12h00

Room: Henri Poincaré

Differential flatness is a property of dynamic systems that allows the expression of all the variables of the system by a set of differentially independent functions, called flat output, depending on the variables of the system and their derivatives. The differential flatness property has many applications in automatic control theory, such as trajectory planning and trajectory tracking. This property was first introduced for the class of integer order systems and then extended to the class of fractional order systems. This talk will present the flatness of the fractional order linear systems and more specifically the methods for computing fractional flat outputs.
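A minimal integer-order illustration of the definition above (fractional systems require fractional derivatives and are not covered): for the double integrator $x_1' = x_2$, $x_2' = u$, the output $y = x_1$ is flat, since $x_2 = y'$ and $u = y''$. Planning a rest-to-rest motion therefore only requires choosing a smooth $y(t)$ with the right boundary values, here the cubic $x_0 + (x_T - x_0)(3s^2 - 2s^3)$ with $s = t/T$. The function name and the choice of cubic are assumptions made for the sketch.

```python
def flat_rest_to_rest(T, x0, xT):
    """Rest-to-rest trajectory for x1' = x2, x2' = u, via the flat output y = x1.

    Returns a function t -> (x1, x2, u) computed from y and its derivatives.
    """
    d = xT - x0

    def trajectory(t):
        s = t / T
        y = x0 + d * (3 * s**2 - 2 * s**3)   # x1 = y
        dy = d * (6 * s - 6 * s**2) / T      # x2 = y'
        ddy = d * (6 - 12 * s) / T**2        # u = y''
        return y, dy, ddy

    return trajectory


traj = flat_rest_to_rest(2.0, 0.0, 1.0)
print(traj(0.0), traj(2.0))  # (0.0, 0.0, 1.5) (1.0, 0.0, -1.5)
```

No differential equation is integrated: state and control follow algebraically from the chosen flat output, which is what makes flatness convenient for trajectory planning.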

2021, December 7

msolve: a library for solving multivariate polynomial systems

Time: from 11h00 to 12h00

Room: Grace Hopper

In this talk, we present a new open source library, developed with J.

This talk will cover a short presentation of the current functionalities provided by msolve, followed by an overview of the implemented algorithms, which will motivate the design choices underlying the library. We will also compare the practical performance of msolve with leading computer algebra systems such as Magma, Maple and Singular, showing that msolve can tackle systems which were out of reach of state-of-the-art computer algebra software.

If time permits, we will report on new algorithmic developments for ideal-theoretic operations (joint work with J.

2021, October 19

Reconciling discrete and continuous modeling for the analysis of large-scale Markov chains

Time: from 11h00 to 12h00

Room: Grace Hopper

Markov chains are a fundamental tool for stochastic modeling across a wide range of disciplines. Unfortunately, their exact analysis is often hindered in practice by the massive size of the state space, an infamous problem plaguing many models based on a discrete state representation. When the system under study can be conveniently described as a population process, approximations based on mean-field theory have proved remarkably effective. However, since such approximations essentially disregard the effect of noise, they may lead to inaccurate estimates under conditions such as bursty behavior, separation of populations into low- and high-abundance classes, and multi-stability. This talk will present a new analytical method that combines an accurate discrete representation of a subset of the state space with mean-field equations to improve accuracy at a user-tunable computational cost. Challenging examples drawn from queuing theory and systems biology will show how the method significantly outperforms state-of-the-art approximation methods.

This is joint work with Luca Bortolussi, Francesca Randone, Andrea Vandin, and Tabea Waizmann.

2021, October 5

Linear PDE with constant coefficients

Time: from 11h00 to 12h00

Room: Henri Poincaré

I will present work on practical methods for computing the space of solutions to a system of linear PDE with constant coefficients. These methods are based on the Fundamental Principle of Ehrenpreis–Palamodov from the 1960s, which asserts that every solution can be written as a finite sum of integrals over some algebraic varieties. I will first present the main historical results and then a recent algorithm for computing the space of solutions.

This is a joint work with Marc Harkonen and Bernd Sturmfels.
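Two elementary computations, chosen for illustration and not taken from the talk, show where the integrals over varieties come from. For $\partial_x^2 - \partial_y$, an exponential $e^{ax+by}$ is a solution exactly when $(a, b)$ lies on the parabola $b = a^2$, so superpositions over that curve (for suitable measures $\mu$) are solutions too. For $(\partial_x - \partial_y)^2$, whose characteristic variety $a = b$ carries multiplicity two, one also needs a polynomial multiplier, which is the role played by the differential operators in the Fundamental Principle.

```latex
\begin{aligned}
&(\partial_x^2 - \partial_y)\, e^{a x + b y} = (a^2 - b)\, e^{a x + b y}
  \;\Longrightarrow\; u(x, y) = \int e^{a x + a^2 y}\, d\mu(a) \text{ solves } \partial_x^2 u = \partial_y u,\\
&(\partial_x - \partial_y)\big(x\, e^{a(x+y)}\big) = e^{a(x+y)}, \qquad
 (\partial_x - \partial_y)\, e^{a(x+y)} = 0
  \;\Longrightarrow\; (\partial_x - \partial_y)^2 \big(x\, e^{a(x+y)}\big) = 0.
\end{aligned}
```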

2021, September 28

DD-finite functions: a computable extension for holonomic functions

Time: from 11h00 to 12h00

Room: Grace Hopper

D-finite or holonomic functions are solutions to linear differential equations with polynomial coefficients. It is this property that allows us to represent these functions exactly on the computer. In this talk we present a natural extension of this class of functions: the DD-finite functions. These functions are the solutions of linear differential equations with D-finite coefficients. We will see the properties these functions have and how we can compute with them algorithmically.
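A concrete DD-finite example, chosen for illustration: $\tan x$ satisfies $\cos^2(x)\, f'' = 2f$, since $\tan'' = 2 \tan \sec^2$, and the coefficient $\cos^2 x$ is D-finite but not polynomial. As with D-finite functions, the equation plus initial values determines the Taylor coefficients; the only change is that the recurrence now convolves with the series of the coefficient. The sketch hard-codes this one equation; the function names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def cos_squared_coeffs(N):
    """First N Taylor coefficients of cos(x)^2 = (1 + cos 2x) / 2 at x = 0."""
    c = [Fraction(0)] * N
    c[0] = Fraction(1)
    for k in range(1, (N + 1) // 2):
        c[2 * k] = Fraction((-1) ** k * 2 ** (2 * k - 1), factorial(2 * k))
    return c


def solve_cos2_equation(f0, f1, N):
    """First N Taylor coefficients of the solution of cos(x)^2 f'' = 2 f
    with f(0) = f0 and f'(0) = f1 (N >= 2)."""
    c = cos_squared_coeffs(N)
    f = [Fraction(f0), Fraction(f1)] + [Fraction(0)] * (N - 2)
    for n in range(N - 2):
        # Coefficient of x^n: sum_j c_j (n-j+2)(n-j+1) f_{n-j+2} = 2 f_n; isolate j = 0.
        s = 2 * f[n] - sum(c[j] * (n - j + 2) * (n - j + 1) * f[n - j + 2]
                           for j in range(1, n + 1))
        f[n + 2] = s / ((n + 2) * (n + 1) * c[0])
    return f


print(solve_cos2_equation(0, 1, 8))  # coefficients of x + x^3/3 + 2x^5/15 + 17x^7/315
```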

2021, June 7

Reduction of first integrals of planar vector fields

Time: from 14h00 to 15h00

Online broadcast

The symbolic first integrals of a rational planar vector field are of 4 types: rational, Darbouxian, Liouvillian and Riccati. It is possible to search for them up to a certain degree, but finding one does not mean that there are no other, simpler ones of higher degree. The Poincaré problem consists in finding the rational first integrals of such a vector field. We will add the assumption that a symbolic first integral is given in advance, so that it will “suffice” to determine whether it can be simplified. We will present algorithms for simplifying first integrals in the Riccati and Liouvillian cases, and we will see that, except for one particular case, this is also possible in the Darbouxian case. We will detail a hard example and its links with elliptic curves.

2021, May 10

Differential algebra and tropical differential geometry

Time: from 14h00 to 15h00

Online broadcast

In this talk, I will draw a link between the differential algebra of Ritt and Kolchin and the tropical differential geometry initiated by Grigoriev, on the question of the existence of formal power series solutions. Toward the end of the talk, I will dwell on an approximation theorem which constitutes the hard part of the fundamental theorem of tropical differential geometry.

2021, April 12

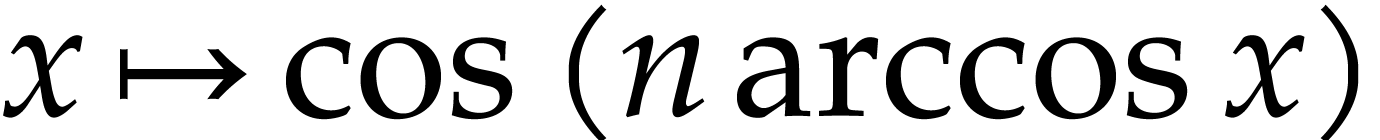

Sparse Interpolation in terms of multivariate Chebyshev polynomials

Time: from 15h00 to 16h00

Online broadcast: https://greenlight.lal.cloud.math.cnrs.fr/b/oll-ehe-mn3

Sparse interpolation refers to the exact recovery of a function as a short linear combination of basis functions from a limited number of evaluations. For multivariate functions, the case of the monomial basis is well studied, as is now the basis of exponential functions. Beyond the multivariate Chebyshev polynomials obtained as tensor products of univariate Chebyshev polynomials, the theory of root systems allows one to define a variety of generalized multivariate Chebyshev polynomials that have connections to topics such as Fourier analysis and representations of Lie algebras. We present a deterministic algorithm to recover a function that is the linear combination of at most r such polynomials from the knowledge of r and an explicitly bounded number of evaluations of this function.

This is a joint work with Michael Singer (https://hal.inria.fr/hal-02454589v1).

Video of a talk on the same topic.
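One reason Chebyshev bases fit Prony-type methods is the product rule $T_m T_n = \tfrac{1}{2}(T_{m+n} + T_{|m-n|})$, which plays the role that $x^m x^n = x^{m+n}$ plays for monomials; roughly speaking, the generalized multivariate Chebyshev polynomials satisfy analogous product rules coming from the symmetries of the root system. The sketch below only checks the univariate identity exactly on a rational point; the function name is hypothetical.

```python
from fractions import Fraction


def chebyshev_T(n, x):
    """T_n(x) via T_0 = 1, T_1 = x, T_{k+1} = 2 x T_k - T_{k-1}."""
    a, b = Fraction(1), Fraction(x)
    if n == 0:
        return a
    for _ in range(n - 1):
        a, b = b, 2 * x * b - a
    return b


x = Fraction(3, 7)
print(all(chebyshev_T(m, x) * chebyshev_T(n, x)
          == (chebyshev_T(m + n, x) + chebyshev_T(abs(m - n), x)) / 2
          for m in range(8) for n in range(8)))  # True
```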

2021, March 29

Gröbner technology over free associative algebras over rings: semi-decidability, implementation and applications

Time: from 14h00 to 15h00

Online broadcast: https://webconf.math.cnrs.fr/b/pog-m6h-mec

Computations with finitely presented associative algebras traditionally boil down to computations over free associative algebras. In particular, there is a notion of Gröbner(–Shirshov) basis, but generally its computation does not terminate, and thus the ideal membership problem is not solvable. However, many important special cases can be approached.

The Letterplace correspondence for free algebras, introduced by La Scala and Levandovskyy, allows one to reformulate the Gröbner theory, to use highly tuned commutative data structures in the implementation, and to reuse parts of existing algorithms in the free non-commutative situation. We report on the newest official release of the subsystem of Singular called Letterplace. With it, we offer unprecedented functionality, some of it available for the first time in the history of computer algebra. In particular, we present practical tools for elimination theory (via truncated Gröbner bases and via support for several kinds of elimination orderings), dimension theory (Gel'fand-Kirillov and global homological dimension), and further homological algebra (such as syzygy bimodules and lifts for ideals and bimodules), to name a few.

Another activity resulted in the extension of non-commutative Gröbner bases to coefficients in principal ideal rings, including $\mathbb{Z}$. Such computations are of great value (for example, in representation theory), since the results can be treated as universal for fields of arbitrary characteristic. Quite nontrivial examples illustrate the powerful abilities of the system we have developed and open the way for new applications.

2021, March 22

Fast algorithm and sharp degree bounds for one block quantifier elimination over the reals

Time: from 14h00 to 15h00

Online broadcast

Quantifier elimination over the reals is one of the most important algorithmic problems in effective real algebraic geometry. It finds applications in several areas of computing and engineering sciences such as program verification, computational geometry, robotics and biology, to name a few. Geometrically, eliminating one block of quantifiers consists in computing a semi-algebraic formula which defines the projection of the set defined by the input polynomial constraints onto the remaining set of variables (which we call parameters).

In this work, we design a fast algorithm computing a semi-algebraic formula defining a dense subset in the interior of that projection when the input is composed of a polynomial system of equations. Using the theory of Gröbner bases, we prove a complexity bound for this algorithm on generic inputs, expressed in terms of the maximum degree of the output formulas and the number of parameters. We provide a new explicit bound on this maximum degree, which improves the state of the art, and we make explicit the determinantal nature of these formulas, which makes them easier to evaluate than the formulas delivered by previous algorithms. Our implementation shows the practical efficiency of our approach, as it allows us to tackle examples which are out of reach of state-of-the-art software.

This is joint work with

2021, March 8

Surreal numbers with exponential and omega-exponentiation

Time: from 13h00 to 14h00

Online broadcast

Surreal numbers were introduced by Conway while working on game theory: they allow one to evaluate partial combinatorial games of any size! This is so because they consist of a proper class containing “all numbers great and small”, but also because of the richness of the structure we can endow them with: an ordered real closed field. Moreover, surreal numbers can be seen as formal power series with exp, log and derivation. This makes them an important object also in model theory (universal domain for many theories) and real analytic geometry (formal counterpart for non-oscillating germs of real functions).

In this talk, I will introduce these fascinating objects, starting with the very basic definitions, and will give a quick overview, with a particular emphasis on exp (which extends exp on the real numbers) and the omega map (which extends the omega-exponentiation for ordinals). This will help me to subsequently present our recent contributions with A. Berarducci, S. Kuhlmann and V. Mantova concerning the notion of omega-fields (possibly with exp). One of our motivations is to clarify the link between composition and derivation for surreal numbers.

2021, February 22

Primary ideals and differential operators

Time: from 14h00 to 15h00

Online broadcast

The main objective will be to describe primary ideals with the use of differential operators. These descriptions involve the study of several objects of different nature, so to say; the list includes: differential operators, differential equations with constant coefficients, Macaulay's inverse systems, symbolic powers, Hilbert schemes, and the join construction.

As an interesting consequence, we will introduce a new notion of differential powers which coincides with symbolic powers in many interesting non-smooth settings, and so it could serve as a generalization of the Zariski-Nagata Theorem. I will report on some joint work with Roser Homs and Bernd Sturmfels.

2020, December 1

Algebraic geometry codes from surfaces over finite fields

Time: from 15h00 to 16h00

Online broadcast

Let $K[x_1, \dots, x_n]$ be a polynomial ring in $n$ variables with coefficients in a field $K$ of characteristic zero. Consider a sequence of $s$ polynomials $g = (g_1, \dots, g_s)$ in $K[x_1, \dots, x_n]$ and a polynomial matrix $F$ in $K[x_1, \dots, x_n]^{p \times q}$ with $p$ rows and $q$ columns such that $p$ is at most $q$ and $n = q - p + s + 1$. We are interested in the algebraic set $V_p(F, g)$ of points in an algebraic closure of $K$ at which all polynomials in $g$ and all $p$-minors of $F$ vanish.

Such polynomial systems arise in a variety of applications including, for example, polynomial optimization and computational geometry.

We investigate the structure of $V_p(F, g)$ to provide bounds on the number of isolated points in $V_p(F, g)$, depending on the maxima of the degrees in rows (resp. columns) of $F$ when we study the total degrees of all polynomials in $g$ and all entries of $F$, or on the mixed volumes of column supports of $F$ when these polynomials are sparse. In addition, we design probabilistic homotopy algorithms for computing the isolated points, whose running time is polynomial in the bound on the number of isolated points. We also derive complexity bounds for the particular but important case where $g$ and the columns of $F$ satisfy weighted degree constraints. Such systems arise naturally in the computation of critical points of maps restricted to algebraic sets when both are invariant by the action of the symmetric group.

This is joint work with J.-C. Faugère, J. D. Hauenstein, G. Labahn, M. Safey El Din and É. Schost.

2020, November 24

Degroebnerization and error correcting codes: Half Error Locator Polynomial

Time: from 15h00 to 16h00

Online broadcast

The concept of “Degroebnerization” was introduced by Mora in his books, on the basis of previous results by Mourrain, Lundqvist and Rouillier.

Since the computation of Gröbner bases is often expensive and sometimes infeasible, Degroebnerization proposes to limit their use to the cases in which it is really necessary, and to find other tools for problems that are classically solved by means of Gröbner bases. We propose an example of such a problem, dealing with the efficient decoding of binary cyclic codes by means of the locator polynomial. Such a polynomial has variables corresponding to the syndromes, as well as variables corresponding to the error locations. Decoding then consists in evaluating the polynomial at the syndromes and finding the roots in the corresponding variable. One needs a sparse polynomial, so that its evaluation remains efficient. In this talk we show a preliminary result in this framework: for a certain error correction capability, a polynomial of this kind can be found in a degroebnerized fashion, and it grows linearly with the length of the code. We will also give some hints on potential extensions to more general codes.

2020, October 27

Algebraic geometry codes from surfaces over finite fields

Time: from 14h00 to 15h00, Room: Grace Hopper

Online broadcast: https://webconf.math.cnrs.fr/b/pog-4rz-uec

Algebraic geometry codes were introduced by Goppa in 1981 on curves defined over finite fields and have been extensively studied since then. Even though Goppa's construction holds on varieties of dimension higher than one, the literature is less abundant in this context. However, some work has been undertaken in this direction, especially on codes from surfaces. The goal of this talk is to provide a theoretical study of algebraic geometry codes constructed from surfaces defined over finite fields, using tools from intersection theory. First, we give lower bounds for the minimum distance of these codes in two wide families of surfaces: those with strictly-nef or anti-nef canonical divisor, and those which do not contain absolutely irreducible curves of small genus. Secondly, we specialize and improve these bounds for particular families of surfaces, for instance in the case of abelian surfaces and minimal fibrations, using the geometry of these surfaces.

The results appear in two joint works with Y. Aubry, F. Herbaut and M. Perret, preprints: https://arxiv.org/pdf/1904.08227.pdf and https://arxiv.org/abs/1912.07450.

2020, October 13

A new algorithm for finding the input-output equations of differential models

(Joint work with Christian Goodbrake, Heather Harrington, and Gleb Pogudin)

Time: from 14h00 to 15h00, Room: Gilles Kahn

The input-output equations of a differential model are consequences of the differential model which are also “minimal” equations depending only on the input, output, and parameter variables. One of their most important applications is the assessment of structural identifiability.

In this talk, we present a new resultant-based method to compute the input-output equations of a differential model with a single output. Our implementation showed favorable performance on several models that are out of reach of state-of-the-art software. We will talk about ideas and optimizations used in our method as well as possible ways to generalize them.
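A small hand-worked example of an input-output equation, chosen for illustration (the talk's resultant-based method targets much larger models). For a two-compartment chain with input $u$, output $y = x_1$ and parameters $p_1, p_2$, eliminating the unobserved state $x_2$ gives an equation in $y$, $u$ and the parameters only. Its coefficients determine $p_1 + p_2$ and $p_1 p_2$, so, under the usual assumption that these coefficients can be recovered from input-output data, $p_1$ and $p_2$ are identifiable only up to exchange.

```latex
\begin{aligned}
&x_1' = -p_1 x_1 + x_2, \qquad x_2' = -p_2 x_2 + u, \qquad y = x_1,\\
&x_2 = y' + p_1 y \;\Longrightarrow\; x_2' = y'' + p_1 y' = -p_2\,(y' + p_1 y) + u,\\
&\text{input-output equation: } y'' + (p_1 + p_2)\, y' + p_1 p_2\, y - u = 0.
\end{aligned}
```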

2020, July 7

Computing Riemann–Roch spaces in subquadratic time

(Joint work with A. Couvreur and G. Lecerf)

Time: from 14h00 to 15h00, Room: teleconference

Given a divisor $D$ (i.e. a formal sum of points on a curve), the Riemann–Roch space $L(D)$ is the set of rational functions whose poles and zeros are constrained by $D$. These spaces are important objects in algebraic geometry and computing them explicitly has applications in various fields: diophantine equations, symbolic integration, arithmetic in Jacobians of curves and error-correcting codes.
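The simplest case of the definition can be worked out by hand. This sketch assumes the usual convention $L(D) = \{f : \operatorname{div}(f) + D \ge 0\} \cup \{0\}$, a base field $k$, and takes the projective line (genus $g = 0$) with $D = d\,\infty$, $d \ge 0$:

```latex
L(d\,\infty) = \{\, f \in k(x) : f \text{ has no pole outside } \infty
  \text{ and a pole of order} \le d \text{ there} \,\}
  = \{\, p \in k[x] : \deg p \le d \,\},
\qquad
\dim L(d\,\infty) = d + 1 = \deg D + 1 - g .
```

The last equality agrees with the Riemann–Roch theorem, which gives $\dim L(D) = \deg D + 1 - g$ whenever $\deg D > 2g - 2$.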

Since the 1980s, many algorithms have been designed to compute Riemann–Roch spaces, incorporating increasingly efficient primitives from computer algebra. The state-of-the-art approach ultimately reduces the problem to linear algebra on matrices of size comparable to the input.

In this talk, we will see how one can replace linear algebra by structured linear algebra on polynomial matrices of smaller size. Using a complexity bound due to Neiger, we design the first subquadratic algorithm for computing Riemann–Roch spaces.

2020, June 23

Exact model reduction by constrained linear lumping

(Joint work with A. Ovchinnikov, I.C. Perez Verona, and M. Tribastone)

Time: from 14h00 to 15h00, Room: teleconference

We solve the following problem: given a system of ODEs with polynomial right-hand side, find the maximal exact model order reduction by a linear transformation that preserves the dynamics of user-specified linear combinations of the original variables. Such a transformation can dramatically reduce the dimension of a model and lead to new insights about it. We will present an algorithm for solving the problem and applications of the algorithm to examples from the literature. Then I will describe directions for further research and their connections with the structure theory of finite-dimensional algebras.
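For the special case of a *linear* system $x' = Ax$ (the talk treats polynomial right-hand sides), an exact linear lumping $y = Lx$ requires $y' = LAx$ to be expressible in terms of $y$, i.e. $LA = BL$ for some matrix $B$. Equivalently, the row space of $L$ must be closed under $v \mapsto vA$, and the smallest such space containing the user-specified combinations can be found by a Krylov-style iteration. The sketch below, with hypothetical helper names and exact rational arithmetic, only illustrates this linear case; it is not the algorithm of the talk.

```python
from fractions import Fraction

def reduce_vec(v, basis):
    """Subtract multiples of the basis rows (kept in insertion order) so that
    v vanishes at every pivot; the result is zero iff v lies in their span."""
    for piv, row in basis:
        if v[piv] != 0:
            c = v[piv] / row[piv]
            v = [a - c * b for a, b in zip(v, row)]
    return v

def lumping_subspace(A, observed):
    """Smallest space of row vectors containing `observed` and closed under
    v -> v A. Its basis rows L give an exact lumping y = L x of x' = A x."""
    n = len(A)
    basis = []                                   # (pivot index, row) pairs
    queue = [[Fraction(c) for c in v] for v in observed]
    while queue:
        v = reduce_vec(queue.pop(), basis)
        piv = next((i for i, c in enumerate(v) if c != 0), None)
        if piv is None:
            continue                             # already in the span
        basis.append((piv, v))
        # the row vector v A, whose j-th entry is sum_i v[i] * A[i][j]
        queue.append([sum(v[i] * A[i][j] for i in range(n)) for j in range(n)])
    return [row for _, row in basis]

A = [[-1, 0, 1],
     [0, -1, 1],
     [0, 0, -2]]
L = lumping_subspace(A, [[1, 1, 0]])             # observe x1 + x2
print([[int(c) for c in row] for row in L])      # [[1, 1, 0], [0, 0, 2]]
```

Here $y_1 = x_1 + x_2$ and $y_2 = 2x_3$ satisfy the closed system $y_1' = -y_1 + y_2$, $y_2' = -2y_2$, so three variables are lumped into two while the dynamics of $x_1 + x_2$ is preserved.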

2020, June 9

Asymptotic differential algebra

Time: from 14h00 to 15h00, Room: teleconference

I will describe a research program put forward by Joris van der Hoeven and his frequent co-authors Lou van den Dries and Matthias Aschenbrenner, in the framework of asymptotic differential algebra. This program sets out to use formal tools (log-exp transseries) and number theoretic / set-theoretic tools (surreal numbers) to select and study properties of “monotonically regular” real-valued functions.

2020, May 26

Counting points on hyperelliptic curves defined over finite fields of large characteristic: algorithms and complexity

Time: from 14h00 to 15h00, Room: teleconference

Counting points on algebraic curves has drawn a lot of attention due to its many applications, from number theory and arithmetic geometry to cryptography and coding theory. In this talk, we focus on counting points on hyperelliptic curves over finite fields of large characteristic $p$. In this setting, the most suitable algorithms are currently those of Schoof and Pila, because their complexities are polynomial in $\log q$, where $q$ is the cardinality of the base field. However, their dependency on the genus $g$ of the curve is exponential, and this is already a problem even for $g = 3$.

Our contributions mainly consist of establishing new complexity bounds with a smaller dependency on $g$ of the exponent of $\log q$. For hyperelliptic curves, previous work showed that this dependency was quasi-quadratic, and we reduced it to a linear one. Restricting to more special families of hyperelliptic curves with explicit real multiplication (RM), we obtained a constant bound for this exponent.

In genus 3, we proposed an algorithm based on those of Schoof and Gaudry–Harley–Schost whose complexity is prohibitive in general, but turns out to be reasonable when the input curves have explicit RM. In this more favorable case, we were able to count points on a hyperelliptic curve defined over a 64-bit prime field.

In this talk, we will carefully reduce the problem of counting points to that of solving polynomial systems. More precisely, we will see how our results are obtained by considering either smaller or structured systems and choosing a convenient method to solve them.
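For contrast with the Schoof–Pila approach, the most naive method simply enumerates the field. The sketch below is our own baseline, not an algorithm from the talk: it counts the points of $y^2 = f(x)$ over a prime field $\mathbb{F}_p$ with Euler's criterion, assuming $p$ is odd and $f$ is squarefree of odd degree, so that the smooth projective model has exactly one point at infinity. Its cost is linear in $p$, i.e. exponential in $\log p$, which is why algorithms polynomial in the logarithm of the field size matter in large characteristic.

```python
def count_points(coeffs, p):
    """Number of F_p-points on the smooth projective model of y^2 = f(x),
    where f(x) = sum(coeffs[i] * x**i). Assumes p is an odd prime and f is
    squarefree of odd degree, so there is exactly one point at infinity."""
    total = 1                                   # the point at infinity
    for x in range(p):
        fx = 0
        for c in reversed(coeffs):              # Horner evaluation of f(x) mod p
            fx = (fx * x + c) % p
        if fx == 0:
            total += 1                          # the single point (x, 0)
        elif pow(fx, (p - 1) // 2, p) == 1:     # Euler's criterion: fx is a square
            total += 2                          # the two points (x, +y), (x, -y)
    return total

print(count_points([1, 0, 0, 0, 0, 1], 7))      # y^2 = x^5 + 1 over F_7 -> 8
```

The example curve $y^2 = x^5 + 1$ (genus 2) is an arbitrary choice for illustration.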

2020, May 12

Shortest paths… from Königsberg to the tropics

Time: from 14h00 to 15h00, Room: teleconference

Trying to avoid technicalities, we highlight Jacobi's contribution to graph theory and shortest path problems. His starting point is the computation of a maximal transversal sum in a square $n \times n$ matrix, that is, choosing $n$ terms, one in each row and each column, with maximal sum. This corresponds to the tropical determinant, which was thus discovered in Königsberg, on the shores of the Baltic.
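The tropical determinant of an $n \times n$ matrix $(a_{ij})$ is the maximum, over all permutations $\sigma$, of $\sum_i a_{i,\sigma(i)}$. The brute-force sketch below enumerates all $n!$ permutations and is for illustration only; polynomial-time methods, such as the Hungarian algorithm, avoid this enumeration. The matrix is a hypothetical example.

```python
from itertools import permutations

def tropical_determinant(a):
    """Max over permutations sigma of sum_i a[i][sigma(i)], with a maximizing sigma."""
    n = len(a)
    best = max(permutations(range(n)),
               key=lambda s: sum(a[i][s[i]] for i in range(n)))
    return sum(a[i][best[i]] for i in range(n)), best

a = [[0, 5, 1],
     [2, 0, 7],
     [4, 3, 0]]
print(tropical_determinant(a))   # (16, (1, 2, 0)): terms 5 + 7 + 4
```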

The key ingredient of Jacobi's algorithm for computing the tropical determinant is to build paths between some rows of the matrix until all rows are connected. This makes it possible to compute a canon, that is, a matrix obtained by adding the same constant to all the terms of each row, in which one can find terms that are maximal in their columns and located in pairwise distinct rows.

Jacobi gave two algorithms to compute the minimal canon, the first when one already knows a canon, the second when one knows the terms of a maximal transversal sum. Both correspond to well-known algorithms for computing shortest paths: Dijkstra's algorithm, for positive weights on the edges of the graph, and the Bellman–Ford algorithm, when some weights can be negative.
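Since Jacobi's second algorithm corresponds to Bellman–Ford, here is a textbook sketch of that shortest path algorithm, not a transcription of Jacobi's own procedure. The graph is a hypothetical example with one negative edge.

```python
def bellman_ford(n, edges, source):
    """Shortest distances from source in a graph on vertices 0..n-1 whose
    edges (u, v, w) may have negative weights; raises ValueError if a
    negative cycle is reachable from source."""
    inf = float("inf")
    dist = [inf] * n
    dist[source] = 0
    for _ in range(n - 1):          # n - 1 rounds of relaxation suffice
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
    for u, v, w in edges:           # any further improvement => negative cycle
        if dist[u] + w < dist[v]:
            raise ValueError("negative cycle reachable from source")
    return dist

edges = [(0, 1, 4), (0, 2, 5), (1, 2, -3), (2, 3, 2)]
print(bellman_ford(4, edges, 0))    # [0, 4, 1, 3]
```

With nonnegative weights, Dijkstra's algorithm reaches the same distances with less work, which mirrors the split between Jacobi's two algorithms.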

After a short tour with Euler over the bridges of Königsberg and a tribute to the contributions of Kőnig, Egerváry and Kuhn, we will conclude with the computation of a differential resolvent.

2020, April 28

Interval Summation of Differentially Finite Series

Time: from 14h00 to 15h00, Room: teleconference

I will discuss the computation of rigorous enclosures of sums of power series solutions of linear differential equations with polynomial coefficients ("differentially finite series"). The coefficients of these series satisfy simple recurrences, leading to a simple and fast iterative algorithm for computing the partial sums. However, when the initial values (and possibly the evaluation point and the coefficients of the equation) are given by intervals, naively running this algorithm in interval arithmetic leads to an overestimation that grows exponentially with the number of terms. I will present a simple (but seemingly new!) variant of the algorithm that avoids interval blow-up. I will also briefly discuss other aspects of the evaluation of sums of differentially finite series, and a few applications.
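The blow-up can be reproduced on a toy recurrence. The sketch below uses the hypothetical recurrence $u_{k+1} = u_k - u_{k-1}$, whose exact solutions stay bounded, and exact rational endpoints instead of floating point (a rigorous floating-point version would also need outward rounding). It compares the naive interval iteration with one simple remedy for linear recurrences: compute exactly the coefficients expressing $u_n$ as a linear form in the initial values, and only then enclose. This is not necessarily the variant presented in the talk.

```python
from fractions import Fraction

class Interval:
    """Closed interval [lo, hi] with exact rational endpoints (no rounding)."""
    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi
    def __add__(self, other):
        return Interval(self.lo + other.lo, self.hi + other.hi)
    def __sub__(self, other):
        return Interval(self.lo - other.hi, self.hi - other.lo)
    def scale(self, c):
        a, b = c * self.lo, c * self.hi
        return Interval(min(a, b), max(a, b))
    def width(self):
        return self.hi - self.lo

def naive_term(u0, u1, n):
    """u_n for u_{k+1} = u_k - u_{k-1}, iterated directly on intervals."""
    a, b = u0, u1
    for _ in range(n):
        a, b = b, b - a
    return a

def linear_term(u0, u1, n):
    """Track exact (alpha_k, beta_k) with u_k = alpha_k*u0 + beta_k*u1,
    then use interval arithmetic only once, at the end."""
    (a0, b0), (a1, b1) = (1, 0), (0, 1)
    for _ in range(n):
        (a0, b0), (a1, b1) = (a1, b1), (a1 - a0, b1 - b0)
    return u0.scale(a0) + u1.scale(b0)

eps = Fraction(1, 10**6)
x = Interval(1 - eps, 1 + eps)
print(float(naive_term(x, x, 30).width()))    # about 2.69: widths grow like Fibonacci numbers
print(float(linear_term(x, x, 30).width()))   # 2e-06
```

The naive widths satisfy $w_{k+1} = w_k + w_{k-1}$ because interval subtraction adds widths, whereas the linear-form approach only pays for the uncertainty of the initial values once.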

2020, April 7

Identifiability from multiple experiments

(Joint work with A. Ovchinnikov, A. Pillay, and T. Scanlon)

Time: from 14h00 to 15h00, Room: teleconference

For a system of parametric ODEs, the identifiability problem is to find which functions of the parameters can be recovered from the input-output data of a solution assuming continuous noise-free measurements.

If one is allowed to use several generic solutions for the same parameter values, it is natural to expect that more functions become identifiable. Natural questions in this case are: which functions become identifiable once enough experiments are performed, and how many experiments are enough? In this talk, I will describe recent results on these two questions.

2020, March 10

Computing linearizing outputs of a flat system

(Joint work with Jean Lévine (Mines de Paris) and Jeremy Kaminski (Holon Institute of Technology))

Time: from 14h to 15h, Room: Flajolet

A flat system is a differential system of positive differential dimension (that is, a system with controls, for the control theorist) whose general solution can be parametrized on some dense open set using $m$ arbitrary functions, called “linearizing outputs”, where $m$ is the differential dimension. The complement of this open set corresponds to singular points for flatness. There also exist points at which classical flat outputs are singular but alternative flat outputs may work, meaning that these are “apparent” singularities.
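A small textbook-style illustration of an apparent singularity follows. The example is our own choice, not one from the talk: the system below has two controls and two candidate flat outputs, each singular on a different set.

```latex
\begin{aligned}
&\dot x_1 = u_1, \qquad \dot x_2 = u_2, \qquad \dot x_3 = x_2\,u_1,\\[2pt]
&y = (x_1,\ x_3):\quad x_2 = \dot y_2/\dot y_1,\quad u_1 = \dot y_1,\quad
  u_2 = \tfrac{d}{dt}\bigl(\dot y_2/\dot y_1\bigr)
  \quad\text{(singular where } u_1 = 0\text{)},\\[2pt]
&z = (x_3 - x_1 x_2,\ x_2):\quad \dot z_1 = -x_1 u_2,\quad \dot z_2 = u_2
  \;\Longrightarrow\; x_1 = -\dot z_1/\dot z_2,\quad
  x_3 = z_1 - z_2\,\dot z_1/\dot z_2
  \quad\text{(singular where } u_2 = 0\text{)}.
\end{aligned}
```

Points where $u_1 = 0$ but $u_2 \neq 0$ are therefore only apparent singularities of $y$, since $z$ is regular there; where $u_1 = u_2 = 0$, neither of these two flat outputs works.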

We present available methods to compute linearizing outputs of a flat system, with special attention to apparent singularities: how to design regular alternative flat outputs, assuming they exist. As examples, we will consider classical models of quadcopters and planes.

2019, November, 18

An elementary proof of Heintz's Bézout inequality

Time: from 14h to 16h, Room: Grace Hopper

While writing a text on the “Kronecker” algorithm accessible to a wide audience, we were led to write a complete proof of Heintz's Bézout inequality for algebraic varieties using, let us say, elementary mathematical arguments. At the same time, we provide an extension of this inequality to constructible sets, as well as some new applications to certain algorithmic problems. This talk will present the main lines of these elementary proofs. The applications, toward the end of the talk, will be related to some new ideas around correct test sequences for systems of polynomial equations.
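For reference, the inequality for algebraic varieties reads as follows, where $k$ is a field and the degree of a variety is the sum of the degrees of its irreducible components. The extension to constructible sets is the contribution of the talk and is not reproduced here.

```latex
V,\ W \subseteq \mathbb{A}^n\bigl(\overline{k}\bigr) \text{ algebraic varieties}
\;\Longrightarrow\;
\deg(V \cap W) \le \deg V \cdot \deg W .
```

For instance, a plane curve of degree $d$ meets a line not contained in it in at most $d$ points, since a finite set of points has degree equal to its cardinality.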

2019, June, 24

How to get an efficient yet verified arbitrary-precision integer library

(Joint work with Guillaume

Time: 14h, Room: Grace Hopper

We present a fully verified arbitrary-precision integer arithmetic library designed using the Why3 program verifier. It is intended as a verified replacement for the mpn layer of the state-of-the-art GNU Multi-Precision library (GMP).

The formal verification is done using a mix of automated provers and user-provided proof annotations. We have verified the GMP algorithms for addition, subtraction, multiplication (schoolbook and Toom-2/2.5), schoolbook division, and divide-and-conquer square root. The rest of the mpn API is work in progress. The main challenge is to preserve and verify all the GMP algorithmic tricks in order to get good performance.

Our algorithms are implemented as WhyML functions. We use a dedicated memory model to write them in an imperative style very close to the C language. Such functions can then be extracted straightforwardly to efficient C code. For medium-sized integers (less than 1000 bits, or 100,000 bits for multiplication), the resulting library is performance-competitive with the generic, pure-C configuration of GMP.
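The sketch below gives a feel for the mpn layer with two of the simplest algorithms mentioned above: carry-propagating addition and schoolbook multiplication on little-endian arrays of 64-bit limbs. The function names only echo GMP's `mpn_add_n` and `mpn_mul_basecase`; this is neither GMP's code nor the verified WhyML version. Python's unbounded integers stand in for the double-limb products that C code must handle explicitly.

```python
LIMB_BITS = 64
MASK = (1 << LIMB_BITS) - 1

def to_limbs(x, n):
    """Little-endian list of n limbs for a nonnegative integer x < 2**(64*n)."""
    return [(x >> (LIMB_BITS * i)) & MASK for i in range(n)]

def from_limbs(limbs):
    return sum(limb << (LIMB_BITS * i) for i, limb in enumerate(limbs))

def add_n(a, b):
    """Add two n-limb numbers; return the n-limb sum and the carry out (0 or 1)."""
    r, carry = [], 0
    for x, y in zip(a, b):
        s = x + y + carry
        r.append(s & MASK)
        carry = s >> LIMB_BITS
    return r, carry

def mul_basecase(a, b):
    """Schoolbook product: one multiply-and-accumulate pass per limb of b."""
    r = [0] * (len(a) + len(b))
    for j, y in enumerate(b):
        carry = 0
        for i, x in enumerate(a):
            t = r[i + j] + x * y + carry    # always fits in two limbs
            r[i + j] = t & MASK
            carry = t >> LIMB_BITS
        r[j + len(a)] = carry               # this limb has not been written yet
    return r

a, b = 2**200 - 12345, 3**100
assert from_limbs(mul_basecase(to_limbs(a, 4), to_limbs(b, 3))) == a * b
```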

2019, June, 3

On singularities of flat affine systems with $n$ states and $n-1$ controls

(Joint work with Jean

Time: 11h, Room: Grace Hopper

We study the set of intrinsic singularities of flat affine systems with $n-1$ controls and $n$ states using the notion of Lie-Bäcklund atlas, previously introduced by the authors. For this purpose, we prove two easily computable sufficient conditions to construct flat outputs as a set of independent first integrals of distributions of vector fields, the first one in a generic case, namely in a neighborhood of a point where